I receive a bunch of questions in reference to my 1080P Does Matter article. Some of the questions are along the lines of “Do I really lose the benefits of 1080p if I sit too far away from a small screen? How? The lines of resolution don’t go away.” While the lines are still there, your eye can’t detect them, but I hadn’t found a simple way to explain this. Then I came across this article and picture (via digg) that provide a great, simple-to-explain example.

quoting http://bloggerwhale.blogspot.com/2007/03/dont-trust-your-eyes.html#

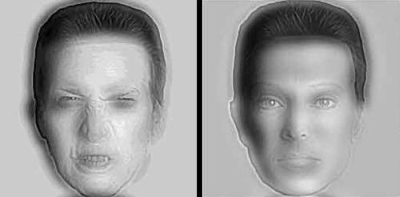

In the pic below you can see two faces. A normal face on the right and a wicked one on the left. Now get up from your chair and walk some 8 steps back and see the magic. The two faces interchange their positions.

This is a great example of when you can perceive the available resolution and when you can’t. Up close, the fine lines (wrinkles) in the left picture are very apparent, but become smooth as you back away; the shadows under the squinted eyes look like the eyes. The fine lines defining the lips and eyes in the right picture become less apparent with distance and the shadows below the mouth become the mouth; the shadows above the eyes become part of the eyes.

To summarize, pretend you’re viewing this picture on an HDTV and the picture and screen resolution are both 1920×1080. You’re getting the full benefit of 1080p if you sit close enough to your TV to see a wicked face on the left and a normal face on the right. If you sit far enough away that you see a normal face on the left and a wicked face on the right, you could go with a lower resolution TV.
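If you want rough numbers to go with the picture, here is a small Python sketch of the distance at which a single pixel shrinks below what the eye can resolve. It rests on assumptions that are mine, not the quoted article's: an eye that resolves about 1 arcminute (a common rule of thumb for 20/20 vision), a 16:9 screen, and a made-up function name `max_useful_distance_in`. Treat it as a ballpark, not a measurement.

```python
import math

# Assumption: a 20/20 eye resolves roughly 1 arcminute of visual angle.
ACUITY_ARCMIN = 1.0


def max_useful_distance_in(diagonal_in, vertical_pixels,
                           aspect=(16, 9), acuity_arcmin=ACUITY_ARCMIN):
    """Distance (inches) beyond which one pixel row subtends less than
    the assumed acuity, so extra resolution stops being visible."""
    w, h = aspect
    # Screen height from the diagonal and the aspect ratio.
    height_in = diagonal_in * h / math.hypot(w, h)
    # Height of a single pixel row.
    pixel_pitch_in = height_in / vertical_pixels
    # Distance at which that pitch subtends exactly the acuity angle.
    angle_rad = math.radians(acuity_arcmin / 60.0)
    return pixel_pitch_in / math.tan(angle_rad)


if __name__ == "__main__":
    for lines in (720, 1080):
        feet = max_useful_distance_in(42, lines) / 12
        print(f'42" screen, {lines} lines: detail fades beyond about {feet:.1f} ft')
```

Under those assumptions a 42″ screen works out to roughly 5.5 ft for 1080 lines and roughly 8.2 ft for 720 lines. Sit farther back than the first number and the two sets should start to look alike, which is the same thing the two faces are showing you.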

I get the same effect by taking off my glasses (the two faces switching demeanor that is).

Since I wear my glasses when I watch tv and my livingroom, 720 will work for me. Not that I’m not eyeballing the 1080 sets, I just can’t justify it now.

I read the comments following the “1080P Does Matter” article and was astounded that people just couldn’t grasp the idea that ocular perception (eyesight) is the limiting factor beyond a certain point in the distance/resolution tests.

I am a scientist and I know I have an advantage when it comes to reading charts and graphs due to my training and experience. However, some of the information seemed like common sense to me, one point in particular: at some given distance it doesn’t matter what resolution is being displayed, it will all look the same. Basically, if you’re far enough away…all resolutions will look like crap.

Seemed simple to me.

PS -Taking off my glasses with the pictures above worked for me too….LOL.

The only thing left now is to find a way to make the tv shops play this picture when you are buying your new hdtv 🙂

The image test is helpful to determine whether a 720p or 1080p set is best for viewing. However, doesn’t some of the newer technology in the 1080p sets enhance the picture (color/contrast/sound) and therefore make the 1080p set a better choice? Compare for instance two Panasonic 42″ plasma sets (TH-42PX75U vs TH-42PZ700U) and notice the speaker system, number of color shades and input/output categories.

I’ve seen stores putting a 42″ 720p plasma set (TH-42PX75U) next to a 50″ 1080p plasma set (TH-50PZ700U). Guess which one was getting the attention.

The 4096 shades of color were the main eye-catcher.

-martin

It has seemed to me that upconversion of resolution to the screen res is inherently more accurate than downconversion to the screen. That is, where are the edges located in an image? In the jaggies? In a downconversion from, say, 1440 to 720, with an edge falling between two of the 1440 pixels, it’s a toss-up where to put the edge in the 720 image. If so, could there be an advantage to having a screen that can display the highest source resolution you intend to watch? Or are the jaggies as invisible as the pixel outlines beyond the distance of discrimination? Or is that something that can be anti-aliased away?
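The edge question can be tried out on a toy example. The sketch below is only an illustration under simple assumptions: one row of pixels, a hard black-to-white edge, a 2:1 downconversion, and two textbook methods (keep every other pixel, or average each pair). The function names are made up here, and real TV scalers use their own, more elaborate filters.

```python
def step_edge(length, edge_at):
    """One row of pixels: black (0.0) before edge_at, white (1.0) from it on."""
    return [0.0 if i < edge_at else 1.0 for i in range(length)]


def downsample_nearest(row):
    """2:1 downconversion that keeps every other pixel (no anti-aliasing)."""
    return row[::2]


def downsample_box(row):
    """2:1 downconversion that averages each pair of pixels (simple anti-aliasing)."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row) - 1, 2)]


if __name__ == "__main__":
    # The edge at pixel 5 falls in the middle of an output pixel;
    # the edge at pixel 4 lines up with an output pixel boundary.
    for edge_at in (5, 4):
        row = step_edge(8, edge_at)
        print("source :", row)
        print("nearest:", downsample_nearest(row))
        print("box    :", downsample_box(row))
```

When the edge falls in the middle of an output pixel, the keep-every-other-pixel method has to snap it to one side, which is the "toss" described above. The averaging method instead gives that pixel an in-between gray, which is the basic idea behind anti-aliasing. When the edge happens to line up with the output grid, both methods agree.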

Great site; I just stumbled on it via some wild and crazy google-surf-google-surf…etc.