Amazon S3 (Simple Storage Service) is a great way to store (and optionally share) large amounts of data. It’s cheap, fast, and reliable. If you’re a casual user, you may not know that you can do much more than just store and serve data over HTTP, as long as you use an advanced application such as Bucket Explorer (or read through the S3 documentation and code it yourself). Here’s a list of 9 features you may not have known about.

- Torrent Tracking and Seeding: Amazon S3 can serve as an ultra-reliable torrent tracker; share/seed the files from your local PC and let S3 act as the tracker. Or let S3 handle both the seeding and the tracking. Here are the details on how to do it.

- Enable / Disable directory browsing: If you are sharing files over HTTP, you may or may not want people to be able to list the contents of a bucket (folder). If you want the bucket contents to be listed when someone enters the bucket name (http://s3.amazonaws.com/bucket_name/), then edit the Access Control List and give the Everyone group the access level of Read (and do likewise with the contents of the bucket). If you don’t want the bucket contents to be listable but do want to share the files within it, disable Read access for the Everyone group on the bucket itself, and then enable Read access on the individual files within the bucket.

- Prevent the contents of a bucket from being indexed by a search engine: If you don’t want the contents of a bucket to be indexed by Google and company, place a file named robots.txt in the bucket and share it with Everyone. (This trick works for any web server.) The file needs to contain the following two entries to prevent indexing:

      User-agent: *
      Disallow: /

- Use your own domain name: You can use your own domain name (http://s3.carltonbale.com) instead of the default Amazon URL (http://s3.amazonaws.com/s3.carltonbale.com/). You just need to edit your DNS settings. Here are the details on how to do it. Bucket Explorer has an option to “Use bucket name as virtual host” to make sharing files in this manner even easier.

- Temporarily share a file using an auto-expire link: You may want to allow someone to download a file but prevent them from sharing the link and having hundreds of people download it as well. With S3, it’s possible to create a link that expires after a defined period of time. The easiest way to do this is with Bucket Explorer. First, make sure that the file’s permissions are set so that it is not readable by everyone, since temporary access is what you’re going after in the first place. Then select the file, right-click, and select Generate Web URL. Select Signed URL and specify the expiration date. Then send the URL to the person with whom you are sharing the file.

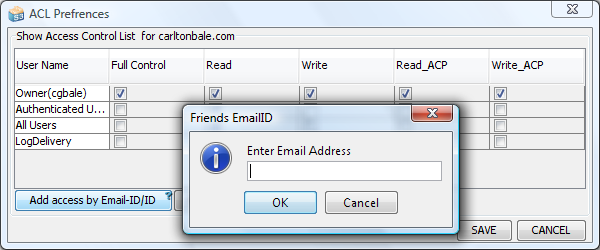
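If you would rather code the auto-expire link yourself, here is a minimal Python sketch of how such a link can be built. It assumes S3's legacy query-string authentication (HMAC-SHA1, often called Signature Version 2), which may or may not be what Bucket Explorer uses internally; newer regions require Signature Version 4, where an SDK call such as boto3's `generate_presigned_url` is the safer route. The function name, bucket, key, and credentials are placeholders for illustration.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def legacy_signed_url(bucket, key, access_key, secret_key, expires_at):
    """Build a time-limited GET URL using legacy query-string authentication.

    expires_at is an absolute Unix timestamp (in seconds); the link is
    rejected once that moment has passed.
    """
    resource = f"/{bucket}/{quote(key)}"
    # Verb, empty Content-MD5, empty Content-Type, expiry, then the resource.
    string_to_sign = f"GET\n\n\n{expires_at}\n{resource}"
    digest = hmac.new(
        secret_key.encode("utf-8"),
        string_to_sign.encode("utf-8"),
        hashlib.sha1,
    ).digest()
    # The Base64 signature can contain "+", "/" and "=", so URL-encode it.
    signature = quote(base64.b64encode(digest).decode("ascii"), safe="")
    return (
        f"https://s3.amazonaws.com{resource}"
        f"?AWSAccessKeyId={quote(access_key, safe='')}"
        f"&Expires={expires_at}&Signature={signature}"
    )
```

For a link that is valid for one hour, pass `int(time.time()) + 3600` as `expires_at`. Anyone holding the URL can download the file until then, so the expiry limits the damage of a forwarded link rather than preventing forwarding.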

- Share your bucket with someone. The third-party bucket feature allows you to share the contents of a bucket. In Bucket Explorer, right-click on the bucket, select “Update bucket’s access control list…”, set the permissions, and add the other person’s email address. See this documentation page for more details.

- Upload any file extension as an HTML file (to redirect to another location.) Say you have a picture shared on your account and it is being leeched by multiple external sites. Instead of just deleting the picture, you can create a web page to replace it that directs people to your site. If your picture was named picture.jpg, create the HTML file and save it on your local computer, then rename it to picture.jpg. If you just upload this file normally to S3, it will be treated as a JPEG image and will not display properly. But you can use Bucket Explorer to upload it as an HTML file, which sets the file’s Content-Type so that S3 serves it as HTML and not as a JPEG. Here is an example of a picture that I converted to an HTML redirect: https://carltonbale.com.s3.amazonaws.com/distance_chart.png

- Store your media on Amazon S3 while running your WordPress blog on a different server. If you’re like me, your web host has overly restrictive storage limits. A bunch of small pictures or just a few large videos can quickly consume your available disk space. To combat this, you can use the Amazon S3 plugin for WordPress. Instead of uploading files to your web server, it uploads them to your Amazon S3 account and automatically links to them, all within the WordPress admin interface.

- Bonus Feature 1 – Compare local and remote file versions using MD5 checksums. Wondering if the version of a file on your local computer is the same as the one stored in your S3 account? Calculate an MD5 checksum for each and see if the results match. On your local computer, you can use a program such as HkSFV to calculate the MD5 sum. To see the MD5 sum of the file on S3, right-click on it and select Properties within Bucket Explorer. If the sums match, the files are identical.

- Bonus Feature 2 – logging: You can enable logging of all activity within a bucket through Bucket Explorer. Right-click on the bucket and select “bucket logging”. You can create log files in the bucket being logged or in a separate bucket.
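The MD5 comparison from Bonus Feature 1 can also be scripted. The sketch below is one possible approach in Python; it assumes the value you copy from S3 is the object's ETag, which equals the MD5 of the content only for objects uploaded in a single part (multipart uploads produce an ETag containing a dash, and some server-side encryption modes also change it). The function names are made up for this example.

```python
import hashlib


def local_md5(path, chunk_size=1024 * 1024):
    """Return the hex MD5 digest of a file, read in chunks to limit memory use."""
    md5 = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
    return md5.hexdigest()


def matches_etag(path, etag):
    """Compare a local file with an S3 ETag.

    Returns True or False for a plain MD5 ETag, and None when the ETag
    looks like a multipart ETag (contains "-") and cannot be compared.
    """
    cleaned = etag.strip().strip('"').lower()
    if "-" in cleaned:
        return None
    return local_md5(path) == cleaned
```

A `None` result means "cannot tell from the ETag alone"; in that case you would need to download the object or store your own checksum as metadata at upload time.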

Those are all of the “hidden” features I’ve found, but I’m sure there are more. Please let me know of any features I’ve missed.

Just discovered your blog and am finding it an extremely invaluable resource. thanks so much for posting these tips, they’ve given me some great ideas on how to better use Amazon S3.

What about images hosted on Amazon S3 and being used in WordPress… how can we prevent hotlinking? Does signed url work that way when publishing in a blog post?

This won’t help at all with linking to images; signed URLs expire after the specified period of time.

The only way to get around others leeching your images is to embed images in Flash/JavaScript (which has its own issues) or to configure the .htaccess file on the server to prevent access to images when the referrer (which is sent by the browser) is an external site (rather than your own site, which is what you’d expect).

“configure the .htaccess file on the server to prevent access to images when the referrer ”

But this won’t work with S3 right? I am trying to figure out how to prevent hotlinking in S3. I tried their bucket policies, but they don’t work for me.

That’s right, .htaccess files don’t work with S3. If someone knows the link, S3 will serve the file. The only way to do it would be some JavaScript on the original web page to prevent right-clicking and discovering the URL of the S3 file. This is only marginally effective and somewhat annoying to users. If you really want to prevent linking to the files themselves, you’ll need to store them on the web server and use access restrictions.

It obviously would be rather resource intensive if you were running a huge image intensive site, but if you’ve got the resources, or aren’t running a porn empire, using PHP or any other server-side scripting language, you can create a really simple image proxy that just passes through the image from s3:

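    <?php
    // Object to proxy; replace with your own bucket and key.
    $bucket = 'urbucket';
    $key = 'sweetimages/urimgpath.jpg';

    // Fetch the object through the AWS SDK (resolved from the Laravel container).
    $s3 = App::make('aws')->get('s3');
    $result = $s3->getObject(array('Bucket' => $bucket, 'Key' => $key));
    $file = (string) $result['Body'];

    if (!empty($file)) {
        // Pass the image bytes straight through to the browser.
        header('Content-Type: image/jpeg');
        header('Content-Length: ' . strlen($file));
        header('Content-Disposition: inline; filename="Whateveryouwant.jpg"');
        echo $file;
        exit;
    } else {
        echo 'whoops, empty file';
    }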

You can use whatever access control mechanism you want in your PHP to stop hotlinking.
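As one example of such an access check, the referrer test described earlier in this thread can be written as a small function that the proxy calls before fetching the image. This is only a sketch, written in Python for brevity rather than PHP; `referer_allowed` and the host names are hypothetical, and because the Referer header is supplied by the browser it can be omitted or forged, so treat this as a deterrent rather than real security.

```python
from urllib.parse import urlparse


def referer_allowed(referer, allowed_hosts, allow_missing=True):
    """Decide whether a request's Referer header points at one of our own sites.

    allow_missing controls what happens when no Referer is sent at all,
    which is common with privacy tools and direct visits.
    """
    if not referer:
        return allow_missing
    host = (urlparse(referer).hostname or "").lower()
    # Accept the exact host or any subdomain of it (www.example.com, etc.).
    return any(
        host == allowed or host.endswith("." + allowed)
        for allowed in allowed_hosts
    )
```

In the proxy, a request that fails the check would get a 403 response (or a placeholder image) instead of the bytes from S3.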

> Enable / Disable directory browsing…

> If you don’t want the bucket contents list-able

> but do want to share the file within it, disable Read access

If I do this, all files that are added after this change become private too. Which is not very usable for me, as I also need to add files without having to edit their ACLs one by one. Any other suggestions?
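One possible answer, assuming bucket policies are available on your account: instead of setting an ACL on every file, attach a bucket policy that grants anonymous `s3:GetObject` on everything in the bucket and does not grant `s3:ListBucket`. New uploads are then readable by URL without any per-file step, while the bucket itself still cannot be listed. The Python sketch below only builds the policy document; the bucket name is a placeholder, and you would paste the output into the bucket's policy editor or send it through your S3 tool of choice.

```python
import json


def public_read_policy(bucket_name):
    """Return a bucket policy (as JSON text) that lets anyone fetch objects
    by URL but grants no permission to list the bucket."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadObjectsOnly",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # The trailing /* targets the objects, not the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

Two caveats: S3's Block Public Access settings may reject a public policy like this until they are relaxed, and the policy makes every object in the bucket public, so keep private files in a separate bucket.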

Is there a way to allow the public to listen to an mp3 but not download it?

Virtual1: No, not really. But you can put a flash or java player on the web page and it will hide the link to the file on Amazon S3. This will keep most people from finding the file location.

put a flash or java player on the web page and it will hide the link? can u show us how???

http://florchakh.com/2007/03/23/how-to-hide-affiliate-links-with-javascript.html

Very nice blog post on S3.

What audio player are you using to play your mp3 on S3? I can’t seem to get WPAudio to work.

Thanks!

Frank

Is there a way for the user to access a shared bucket just using a URL request? Or does the user have to access the shared bucket using a third-party application? I don’t have my bucket shared to everyone; I just have it shared to a certain user with read-only rights in the ACL. I have tested the ACL using S3browers but I don’t want to have to use that product. I might even have a script to access the files remotely if possible. Any help would be appreciated 🙂

Chris

I know of no way to do user authentication over HTTP. I think the S3 protocol is required to do that. The closest feature would be the temporary URLs that you create and send, but that’s probably not what you’re looking for. There may be a web-based S3 client to give an in-the-browser experience, but it wouldn’t be a true HTTP request.

Thanks for the response do you know if the shared folders work with the SDK?

Sorry, but I’m not that familiar with the SDK.

DragonDisk is a fast S3 client with an intuitive graphical user interface ( http://www.dragondisk.com )

This is some nice and valuable Amazon S3 information. I’ve been looking for a free alternative to bucket explorer, do you know of any that work similar?

For item #2, “Enable / Disable directory browsing,” is there a way to allow the listing of the content of a folder (or any folder within a bucket)? I am asking this because we would like to create a folder for each (anonymous) user and put multiple files in there for her/him. Thanks,

Yangler

No, not of which I’m aware. It would require some coding on a website interfacing with S3, but can’t be done directly within S3 as I understand it.

Thanks, Carlton. That was my understanding as well. I’ve mentioned two possible workarounds for content listing:

1. Create a bucket for each user and put the user’s files in that bucket. However, since bucket names are global across all Amazon S3 accounts, I am not sure whether or not there are any restrictions on the number of buckets an account can have. Another problem is that the end user will still likely have no clue on the XML file.

2. Create a simple application that reads the content of the specified bucket and displays only the files and sub-folders within the given folder. This is an extra layer of indirection, of course.

Of course this only applies to public access. For authenticated users, we can always use the S3 management console, or even better, some third party tool such as Bucket Explorer.
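Workaround 2 could look roughly like the Python sketch below. S3 has no real folders, only keys that contain slashes, so "the contents of a folder" means the keys that share a prefix. The function takes a list of keys you have already fetched from the bucket and splits them into the files and sub-folders directly under one folder; `list_folder` and the sample keys are invented for this example. The S3 list API can also do this filtering on the server side through its `prefix` and `delimiter` parameters.

```python
def list_folder(keys, folder):
    """Return (files, subfolders) found directly inside `folder`.

    `keys` is an iterable of S3 object keys; `folder` is a path such as
    "alice" or "alice/photos". An empty string means the bucket root.
    """
    trimmed = folder.strip("/")
    prefix = trimmed + "/" if trimmed else ""
    files, subfolders = [], set()
    for key in keys:
        # Skip keys outside the folder and the folder placeholder itself.
        if not key.startswith(prefix) or key == prefix:
            continue
        rest = key[len(prefix):]
        if "/" in rest:
            # Anything deeper is shown only as its first-level sub-folder.
            subfolders.add(rest.split("/", 1)[0] + "/")
        else:
            files.append(rest)
    return sorted(files), sorted(subfolders)
```

With keys `alice/a.txt`, `alice/photos/1.jpg` and `bob/b.txt`, calling `list_folder(keys, "alice")` gives the file `a.txt` and the sub-folder `photos/`, and nothing belonging to `bob`.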

That is the best S3 article I have stumbled across on the web. Definitely an area where I have to make the effort to learn more as it could prove invaluable. Thanks for writing it.

Thank you, Carlton, for sharing your valuable knowledge.

Excellent post. Keep up the good work.

Regards

Shazad

Is option number 7, “Share your bucket with a someone – whether they’re an S3 user or not,” still supported by Amazon S3? I can’t seem to find the option that you are describing.

Brandon, I believe that it is, but the person must be an Amazon S3 user.

Thanks Carlton for the well presented array of suggestions. Our webmaster found the post worthy to forward to the team as we consider how to make only some Amazon hosted images search indexable when our site is not directly hosted on Amazon.

Hi Carlton

great post! I am hosting JPG files on a S3 CDN – now I want to redirect JPG files to HTML files with the images embedded into it

I tried adding the HTTP header x-amz-website-redirect-location but it doesn’t work

what am I doing wrong?

Option #2 does not appear to work; I am interested in users being able to list the contents of individual folders.

Can anyone tell me how to just simply get a directory of files in a bucket? I inherited a thousand files in a bucket and I would like to write a script to sort them by type but can’t get the names in a file to sort them.

Thanks rodatfiveranksdotcom
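For the question about getting the file names out of a bucket and sorting them by type, here is a hedged Python sketch. A list request on a bucket returns an XML document (`ListBucketResult`) with one `Contents/Key` element per object; the code below parses that XML and groups the keys by file extension. It covers only the parsing and grouping: fetching the XML requires either a publicly listable bucket or an authenticated request, and each response holds at most 1,000 keys, so a larger bucket needs repeated requests. The function names are illustrative.

```python
import os
import xml.etree.ElementTree as ET
from collections import defaultdict

# Namespace used by S3's ListBucketResult documents.
S3_NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}


def keys_from_listing(xml_text):
    """Extract every object key from one ListBucketResult XML response."""
    root = ET.fromstring(xml_text)
    return [el.text for el in root.findall("s3:Contents/s3:Key", S3_NS)]


def group_by_extension(keys):
    """Group keys by lower-cased file extension; keys without one go under "(none)"."""
    groups = defaultdict(list)
    for key in keys:
        ext = os.path.splitext(key)[1].lower() or "(none)"
        groups[ext].append(key)
    return {ext: sorted(names) for ext, names in sorted(groups.items())}
```

Writing the result to a text file is then a short loop over `group_by_extension(keys).items()`.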